PostGIS and Multiprocessing

- artlembo

- Dec 10, 2018

- 5 min read

NYC taxi cab pickup locations for October, 2012

OK, let’s cut to the chase: I used Python’s parallel processing capabilities with Postgres to perform a spatial overlay on approximately 25 million taxi pickup locations (over 5 GB of data), and processed all of it in under 3 minutes!! There. Now you can decide if it’s worth your time to read this long post.

Hopefully you’ve been following my experimentation with using Postgres and PostGIS with large data sets. If not, you can read my last two posts here, and here. The long and the short of it is that by using spatial indexing, spatial sql, and spatial clustering, I was able to take a process with 12 million points that took over 1 hour in a traditional GIS, 10 minutes in PostGIS, and 3 minutes in a GIS utilizing parallel processing, and complete the task in under 2 minutes. My follow up post showed that using spatial sub-division, I was able to reduce that time down to 1 minute, 3 seconds! Please note, my computer is running low on space, so I moved all the data over to an external SSD – therefore, the times will be a little different.

It turns out, other than the tribute to my Dad, these were the most viewed posts in my blog’s history. And, there was lots of good discussion. One person commented that it would be nice to extend the analysis to multiple months. That was a great idea. But, worrisome, as I knew that would be a lot of data. And, with the additional data, I knew I might have come up with another strategy. Fortunately, I’ve had some experience with parallel processing and GIS that you can read about here, here, and here. In fact, you can watch a 2016 presentation I gave at Edinburgh University in Scotland here.

Postgres – a single threaded application

One problem with Postgres (pre 10.0) is that it only runs in a single process. So, it doesn’t matter how many cores your computer has, Postgres is only going to use one of them. There have been a number of attempts to leverage the parallel capabilities of Postgres 10 with some success, but oftentimes, it is difficult to determine the best parameters to achieve optimal results. Therefore, for now I am not going to focus on some of the parallel capabilities of Postgres 10.

In the past, I opened up multiple instances of PGAdmin III and was able to issue multiple queries, and each instance of PGAdmin III actually started another instance of Postgres. I wrote a blog post about it here, and called it a poor man’s parallel processor. The problem was, it is kind of a kludge jumping from each PGAdmin GUI, and hitting the run command. I attempted to run multiple instances of Postgres using other tools like dbLink to no avail – the other approaches still only had Postgres running in a single thread.

Parallel Instances of Postgres

Finally, I tried to give the multiprocessing package in Python 2.7 a go. In this case, Python has a library that allows you to easily perform parallel processing. By calling the multiprocessing object, you can run different functions in separate threads. So, theoretically, with 8 cores, I should be able to run 8 months of taxi data in the same time it took to run 1 month (well, nominally the same time).

The core query that makes it all happen

The parallel process essentially revolves around an SQL query that I defined in the previous posts:

INSERT INTO sumtable (geom, numrides, zone)

SELECT taxi_zones.geom, numrides, a.zone

FROM taxi_zones,

(SELECT taxi_zones.zone,

count(yellow_tripdata_2012_10.geom) AS numrides

FROM taxi_zones

JOIN yellow_tripdata_2012_10

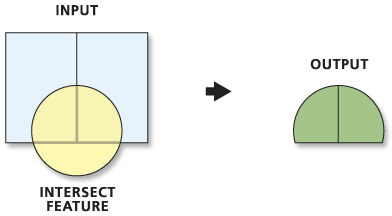

ON ST_Contains(taxi_zones.geom, yellow_tripdata_2012_10.geom)

GROUP BY taxi_zones.zone

) AS a

WHERE taxi_zones.zone = a.zone;This query finds all the pick-up locations in each taxi zone, and sums the number of rides, and groups it by the taxi zone. Because we’ll be running a variant of this query 4 times, I am inserting the results into a temporary table. Another SQL query takes the results of each of the other queries, and performs a similar summation, saving it to a new table:

SELECT sum(numrides) as numrides, zone

INTO sumtable2

FROM sumtable

GROUP BY zoneA mile-high view of the process

Once we expanded out our data from one month to 4 months, I had to follow the same process – load all the data into individual tables for each month, create a geometry column, spatial index, and clustering of the database. An in-depth discussion on how I prepared the data is a little beyond this blog post, but I will cover it in my next course which I plan to have completed by January 1, 2019.

Once the tables were ready, I then had to create a variant of the above query to account for the different table names (taxisandy is now called yellow_tripdata_2012_10, yellow_tripdata_2012_11, yellow_tripdata_2012_12, etc.).

Parallel Python

The next step was to call the multi-processor object in Python to simultaneously fire off all 4 months at a time, returning the results. With this blog post getting rather long, I’m going to hold off on a code-walkthrough, and jump to the results. Stay tuned for the code walkthrough next week.

It turns out that running October, 2012 on a single processor took 2m 27s (note, as I said, I’m actually running the data off of an external SSD as my office computer is running low on space, so these results should be compared relatively to one another). Running November, 2012 on a single processor took 2m 6s. But, here is the cool thing: running October and November takes almost the same amount of time! That’s right, October runs in one core, and November runs in another core, and it completes in 2m 10s. So, there is a level of multiprocessing going on. But, now I ran into another issue: I expanded it out to 3 cores and 3 months, and didn’t see the same kind of speedup. In fact, the time was 3m 24s. So, something is up. When looking at the performance meter, I noticed that my memory allocation was 4GB. It looks like 2 of the months can fit in 4GB, but the 3rd month doesn’t fit in there. Since Postgres is not using more than 4GB of RAM, the disk monitor is showing higher activity. When I only had 2 months, the disk activity was lower. BTW, I am using a 64-bit version of Python, and my computer has 12GB of RAM, so I will check further as to what is happening.

I’m still going to explore this more because the possibilities are incredible. And, what is even more incredible is that the multiprocessing capabilities only require 2 lines of Python code. Yes, you read that right – only 2 lines of Python (ok, 3 lines when you consider that you have to add import multiprocessor).

So, stay tuned. Next, we’ll walk through all the code together. And, in the meantime, I’m going to explore what that 4GB bottleneck is. If anyone has ideas, let me know.

Comments